A cool new fling was just released: the DRS Entitlement Viewer. As stated on the fling site:

“DRS entitlement viewer gives the hierarchical view of vCenter DRS cluster inventory with entitled CPU and memory resources for each resource pool and VM in the cluster”.

The good thing about this fling is that it is deployed as an HTML5 client plugin, so you can use its features from within the vSphere H5 client itself. As a prerequisite, you need “vCenter Server Appliance 6.5 or 6.7”. Let us take a look at how to deploy it. If you ask me, it is pretty easy and quick.

-

Fling Deployment

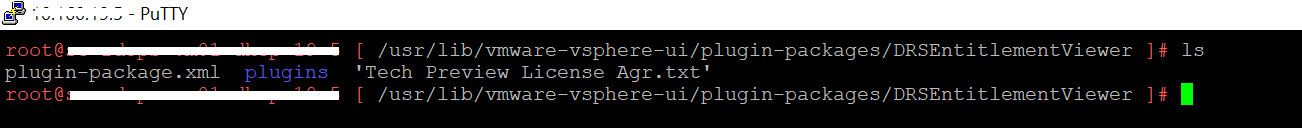

Step 1: Unzip the plugin package (the zip bundle downloaded from the fling site) into the directory “/usr/lib/vmware-vsphere-ui/plugin-packages/”. Below is how it should look. Note the exact fling directory name and its contents.

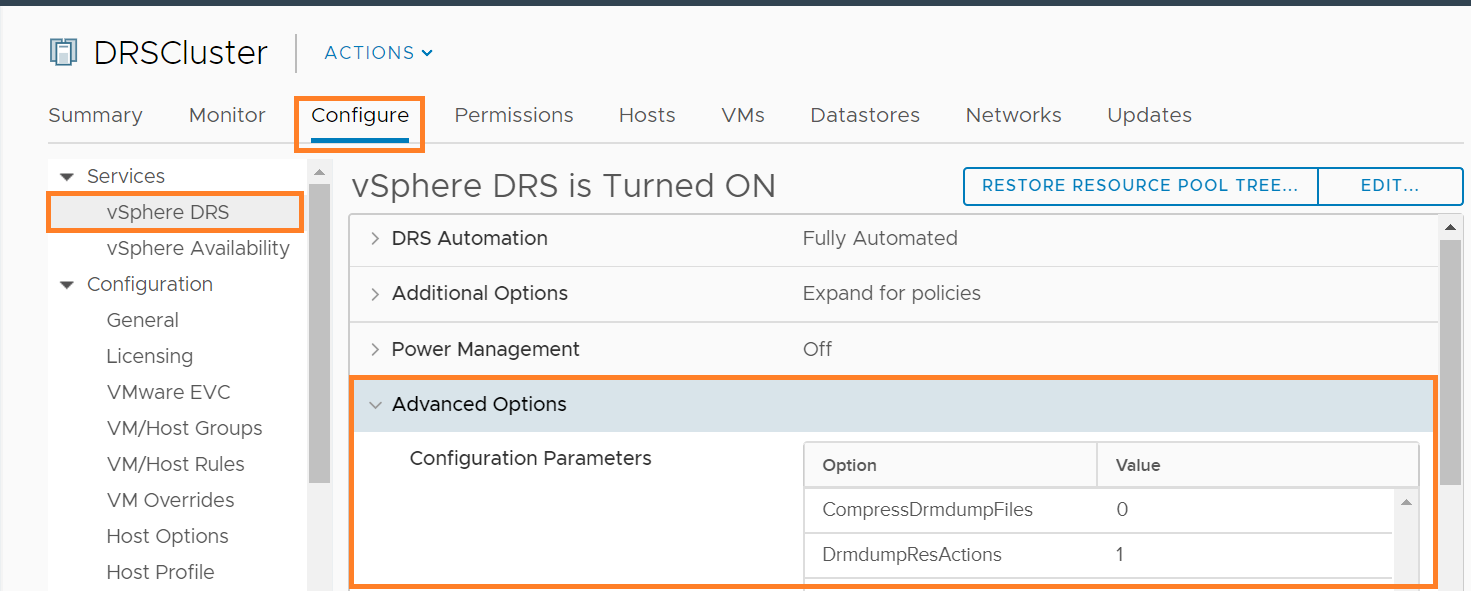

Step 2: Set the DRS cluster advanced options “CompressDrmdumpFiles” to 0 and “DrmdumpResActions” to 1. Below is how it looks.

You can also add these DRS cluster-level advanced options using the PowerCLI code below. This code can be extended to configure these options on all the DRS clusters in your vCenter Server.

[powershell]

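# Assumes an existing Connect-VIServer session; replace the placeholder cluster name
$Cluster = Get-Cluster "Your cluster Name"

# Keep DRS dump (drmdump) files uncompressed
$Cluster | New-AdvancedSetting -Name CompressDrmdumpFiles -Value 0 -Type ClusterDRS

# Second advanced option required by the fling
$Cluster | New-AdvancedSetting -Name DrmdumpResActions -Value 1 -Type ClusterDRS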

[/powershell]
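As mentioned above, the snippet can be extended to every DRS cluster in your vCenter Server. Below is a minimal sketch of that idea, assuming you are already connected with Connect-VIServer and that only DRS-enabled clusters should be touched. Set-DrsEntitlementViewerOptions is a hypothetical helper name, not part of PowerCLI or the fling.

```powershell
# Hypothetical helper: apply the two DRS advanced options needed by the fling
# to every DRS-enabled cluster. Assumes an existing Connect-VIServer session.
function Set-DrsEntitlementViewerOptions {
    # Only clusters with DRS turned on are of interest to the fling
    $drsClusters = Get-Cluster | Where-Object { $_.DrsEnabled }

    foreach ($cluster in $drsClusters) {
        # Same two options as the single-cluster snippet
        $cluster | New-AdvancedSetting -Name CompressDrmdumpFiles -Value 0 -Type ClusterDRS
        $cluster | New-AdvancedSetting -Name DrmdumpResActions -Value 1 -Type ClusterDRS
    }
}
```

Call it once after connecting, e.g. `Set-DrsEntitlementViewerOptions`. If a setting already exists on a cluster, you may prefer to update it with Get-AdvancedSetting piped to Set-AdvancedSetting instead.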

Step 3: Restart the vSphere H5 client service using the commands below.

service-control --stop vsphere-ui

service-control --start vsphere-ui

Note: there are two hyphens before “stop” and “start”.

The above step can also be automated with the vCenter REST APIs (from PowerCLI or any other programming language), without an SSH connection.
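As a rough illustration of doing the restart over REST instead of SSH, here is a minimal PowerShell sketch. It assumes vCenter Server Appliance 6.7, where vCenter services are exposed under the “/rest/vcenter/services” endpoints and API sessions are created via “/rest/com/vmware/cis/session”; please verify these paths in the API Explorer of your own vCenter. It also assumes PowerShell 7 for -SkipCertificateCheck (handy in a lab with self-signed certificates). Restart-VcService is a hypothetical helper name.

```powershell
# Hypothetical helper: restart a vCenter service (vsphere-ui by default) through
# the REST API instead of running service-control over SSH.
# Assumptions: vCSA 6.7 "/rest" endpoints and PowerShell 7 (-SkipCertificateCheck).
function Restart-VcService {
    param(
        [Parameter(Mandatory)][string]$Server,
        [Parameter(Mandatory)][pscredential]$Credential,
        [string]$Service = 'vsphere-ui'
    )
    $base = "https://$Server/rest"

    # Build a Basic auth header from the supplied credential
    $pair = '{0}:{1}' -f $Credential.UserName, $Credential.GetNetworkCredential().Password
    $basic = [Convert]::ToBase64String([Text.Encoding]::UTF8.GetBytes($pair))

    # Log in; the session token is returned in the "value" field
    $session = Invoke-RestMethod -Method Post -Uri "$base/com/vmware/cis/session" `
        -Headers @{ Authorization = "Basic $basic" } -SkipCertificateCheck
    $headers = @{ 'vmware-api-session-id' = $session.value }

    try {
        # Single restart call in place of the stop/start pair
        Invoke-RestMethod -Method Post -Uri "$base/vcenter/services/$Service/restart" `
            -Headers $headers -SkipCertificateCheck
    }
    finally {
        # Always log out of the API session
        Invoke-RestMethod -Method Delete -Uri "$base/com/vmware/cis/session" `
            -Headers $headers -SkipCertificateCheck | Out-Null
    }
}
```

For example: `Restart-VcService -Server 'vc.lab.local' -Credential (Get-Credential)`, where the server name is only a placeholder.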

I think even the first step can be automated with the “ExtensionManager” vSphere API, again without making any SSH connection to the vCenter Server Appliance (more on this in a future post).

-

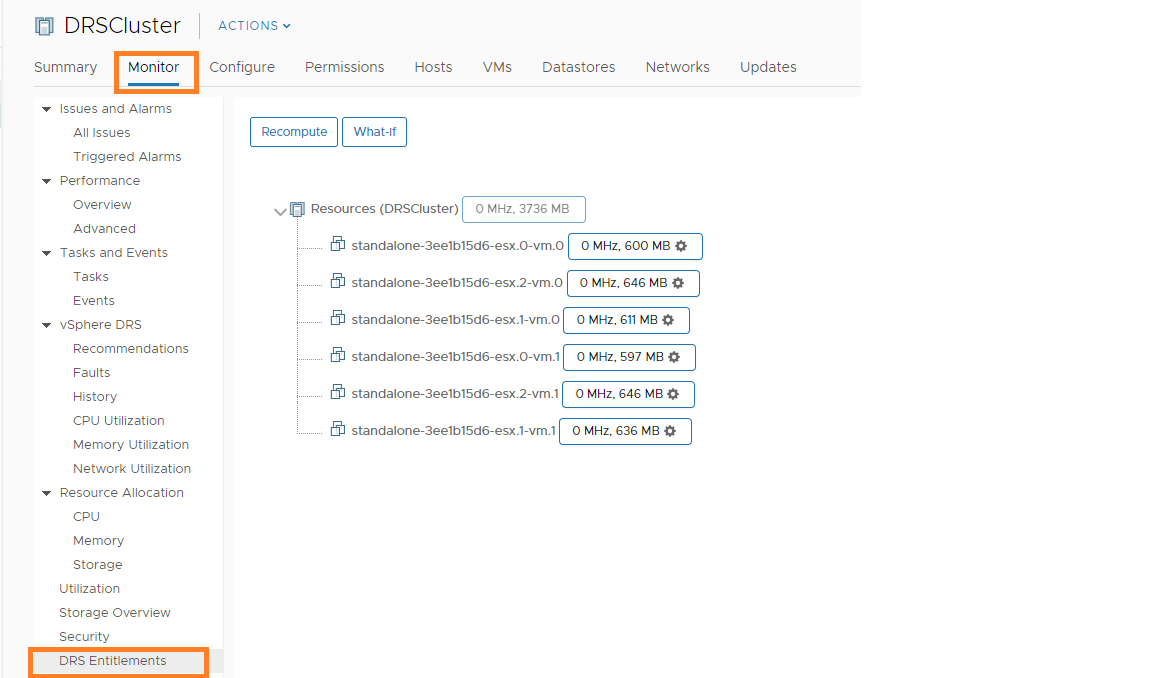

Fling features

As soon as the vSphere H5 client is up, you should see the new “DRS entitlements” section under the “Monitor” tab of each cluster. When I logged into my local H5 client, I could see the DRS entitlements below from both the CPU and memory perspectives.

As you can see, the DRS entitlement of every VM is shown. You may wonder why the CPU entitlement is 0 MHz. That is expected, because all the VMs are completely idle. As a first step, just click “recompute” to get the current DRS entitlement across all VMs/resource pools. Note that when you make actual RLS changes on clustered VMs/RPs and want to see the updated DRS entitlement, you will have to either click “Run DRS” or wait for the next scheduled DRS run (every 5 minutes by default).
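If you would rather trigger a DRS run from a script than click “Run DRS”, the vSphere API exposes RefreshRecommendation() on the ClusterComputeResource managed object, which asks DRS to run again and regenerate its recommendations. Below is a minimal PowerCLI sketch, assuming an existing Connect-VIServer session. That this is exactly what the “Run DRS” button does is an assumption, and Invoke-DrsRun is a hypothetical helper name.

```powershell
# Hypothetical helper: ask DRS to re-evaluate one cluster from PowerCLI.
# Assumes an existing Connect-VIServer session.
function Invoke-DrsRun {
    param([Parameter(Mandatory)][string]$ClusterName)

    # Get-View returns the vSphere API ClusterComputeResource object behind the cluster
    $clusterView = Get-Cluster -Name $ClusterName | Get-View

    # RefreshRecommendation() makes DRS run again and regenerate its recommendations
    $clusterView.RefreshRecommendation()
}
```

For example, `Invoke-DrsRun -ClusterName "Your cluster Name"`, then click “recompute” in the fling to refresh the entitlement view.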

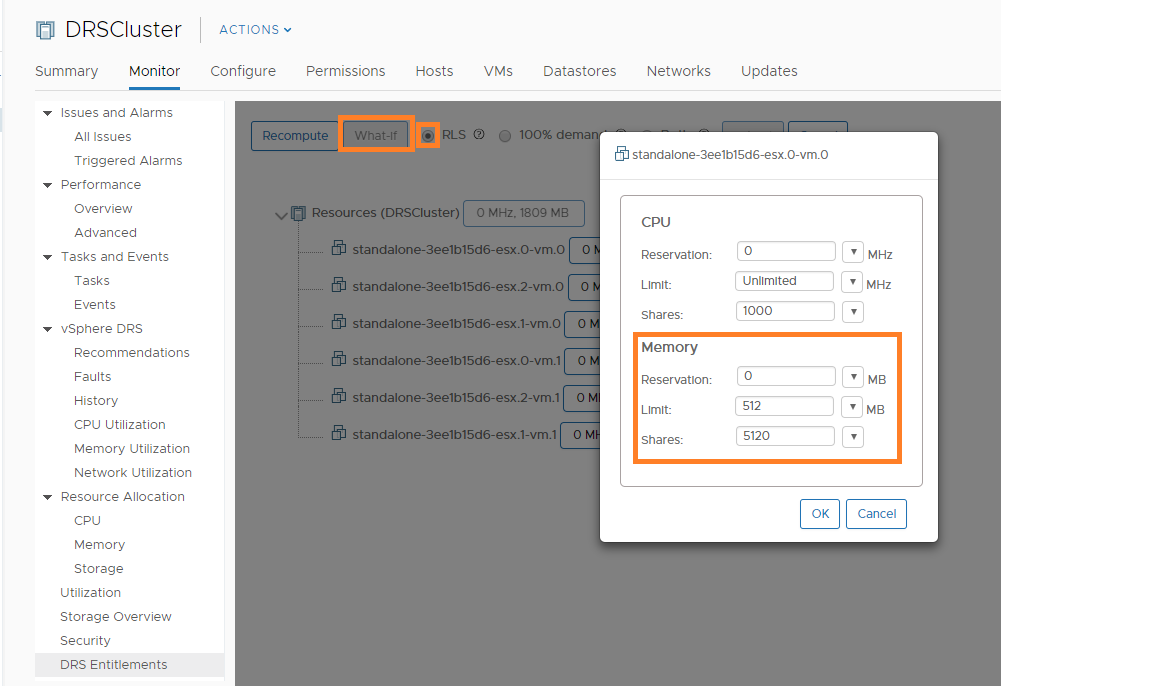

One of the most impressive features of this fling is the ability to do “What if” analysis. The following 3 super cool scenarios are supported.

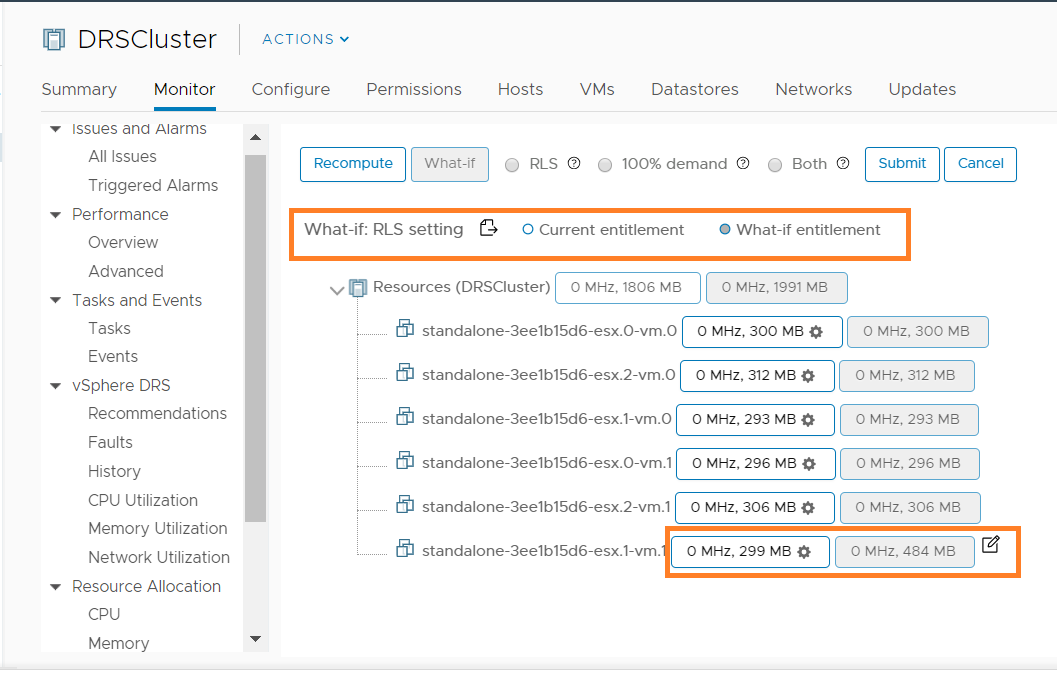

#1: What if the user modifies the RLS (Reservation, Limit, Shares) settings of VMs or resource pools in the cluster? To try this out, first select the RLS radio button; you can then simulate RLS settings for one or more VMs/RPs (there is a settings icon for every VM/RP except the root). Finally, click the “submit” button to see how the DRS entitlement changes for the VMs/RPs whose RLS settings were modified. Please take a look at the couple of screenshots below to understand this better.

I simulated a memory reservation of “500 MB” on one of the VMs, and once I submitted this change, below is how the What if analysis looked.

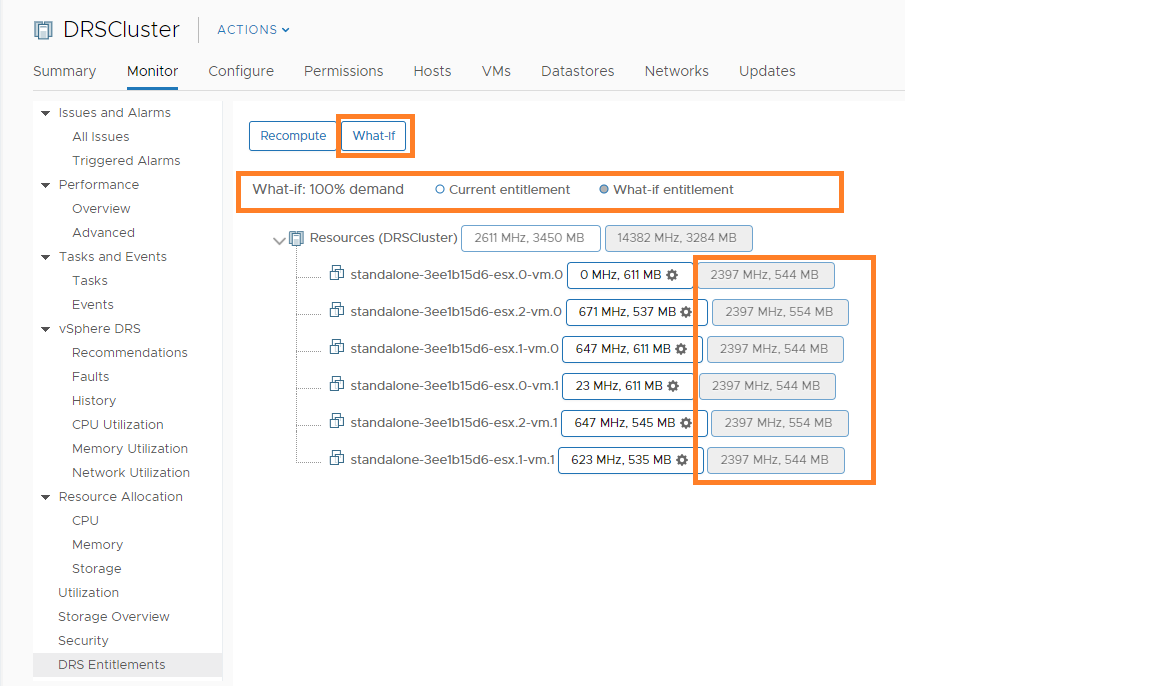

#2: What if the resource demand of all VMs is at 100%? I tried this analysis and below is how it looked. How cool is that?

#3: You can run the What if analysis for both RLS changes and 100% demand at the same time.

Note: As mentioned above, the RLS changes are only simulations; they are not actually applied to the VMs/RPs. How cool is it to do this analysis without touching the actual RLS settings in the cluster! I also highly recommend reading the user guide to understand all the What if scenarios in detail, along with the rest of the features.

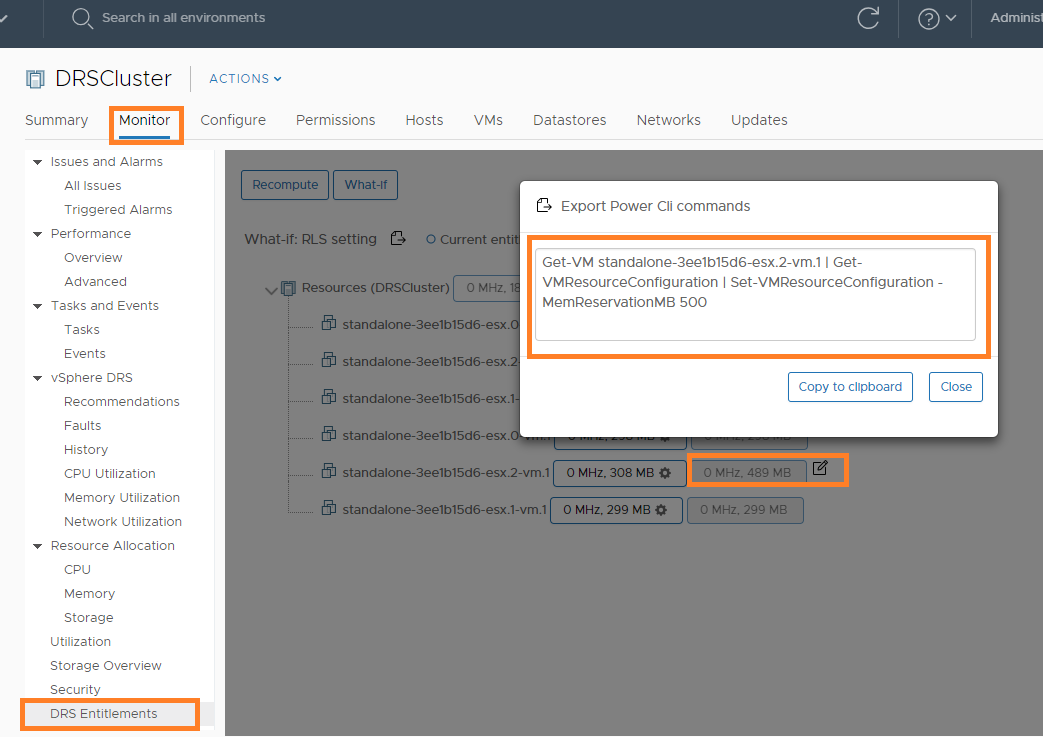

Another impressive feature is the option to get a “PowerCLI” code snippet that actually updates the RLS settings once your analysis is done. Please take a look at one of the examples.
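For the 500 MB memory reservation simulated earlier, applying the change for real with PowerCLI could look roughly like the sketch below. This is not the snippet the fling generates; it is a minimal example assuming an existing Connect-VIServer session and the Get-VMResourceConfiguration / Set-VMResourceConfiguration pipeline. Set-VmMemReservation is a hypothetical helper name.

```powershell
# Hypothetical helper: apply a memory reservation (in MB) to a VM for real,
# e.g. after validating it with the fling's What if analysis.
# Assumes an existing Connect-VIServer session.
function Set-VmMemReservation {
    param(
        [Parameter(Mandatory)][string]$VMName,
        [Parameter(Mandatory)][long]$ReservationMB
    )
    # Read the VM's current resource configuration, then update its memory reservation
    Get-VM -Name $VMName |
        Get-VMResourceConfiguration |
        Set-VMResourceConfiguration -MemReservationMB $ReservationMB
}
```

For example, `Set-VmMemReservation -VMName "Your VM Name" -ReservationMB 500`. Newer PowerCLI releases may flag -MemReservationMB as obsolete in favor of -MemReservationGB.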

I am sure this fling will be very handy for you. If you have any questions or need any help, please leave a comment.

Below are some of the very handy DRS flings

Vikas Shitole is a Staff Engineer 2 at VMware (by Broadcom) India R&D. He currently contributes to core VMware products such as vSphere, VMware Private AI Foundation and, partly, VCF. He is an AI and Kubernetes enthusiast. He is passionate about helping VMware customers and enjoys exploring automation opportunities around core VMware technologies. He has been a vExpert for the last 11 years in a row (2014-24) for his significant contributions to the VMware communities. He is the author of 2 VMware flings and holds multiple technology certifications. He is one of the lead contributors to VMware API Sample Exchange, with more than 35,000 downloads of his API scripts. He has been a speaker at international conferences such as VMworld Europe, USA and Singapore, and was a designated VMworld 2018 blogger as well. He was the lead technical reviewer of the two books “vSphere design” and “VMware virtual SAN essentials” by Packt Publishing.

In addition, he is a passionate cricketer, enjoys bicycle riding and learning about fitness/nutrition, and aspires to complete an Ironman 70.3 one day.