vSphere 6.7 supports one of the most exciting developments in computer memory: Non-Volatile Memory (NVM), also known as persistent memory (PMem). In this post, I give a brief overview of PMem technology and explore how DRS works with PMem-configured VMs.

What is Persistent Memory (PMem)?

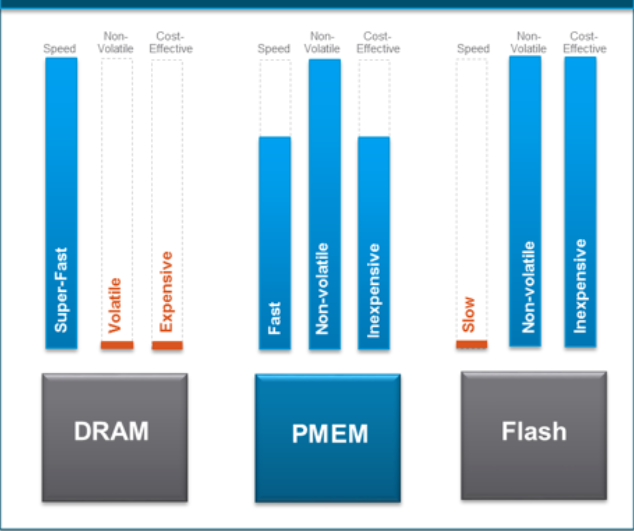

In brief, PMem is a next-generation memory technology with access latency comparable to DRAM (50-300 ns, roughly 100 times faster than SSDs), and unlike DRAM, it retains its data across reboots. In my view, it will greatly improve the performance of performance-sensitive workloads such as analytics and acceleration databases. VMs that need low latency, high bandwidth, and persistence at the same time are the workloads best placed to benefit from this technology.

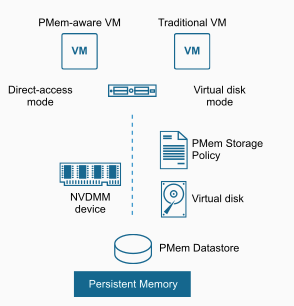

The diagram below highlights the two modes supported by vSphere.

i) Direct-access mode (vPMem): Requires VM hardware version 14 and a PMem-aware guest OS, e.g. Windows Server 2016 or RHEL 7.4.x.

ii) Virtual Disk mode (vPMemDisk): Works with legacy/traditional VMs. It does not require hardware version 14, and the guest OS does not need to be PMem-aware.

If you want to learn more about PMem technology, I recommend watching this YouTube presentation by VMware's CTO Richard B.

vSphere DRS interop with PMem VMs

First, I deployed a quick lab setup: one vCenter Server and three ESXi hosts, with PMem simulated on two of the three hosts. All three hosts are in a DRS-enabled cluster. Since I do not have any PMem-aware VMs, I explored PMem in vPMemDisk mode, i.e. the Virtual Disk mode described above.

Note: I simulated PMem on the two hosts using a handy hack provided by engineering, i.e. by running the esxcli command “esxcli system settings kernel set -s fakePmemPct -v 33”. This simulation must be used for lab or educational purposes only. William has also written a nice article on the topic.

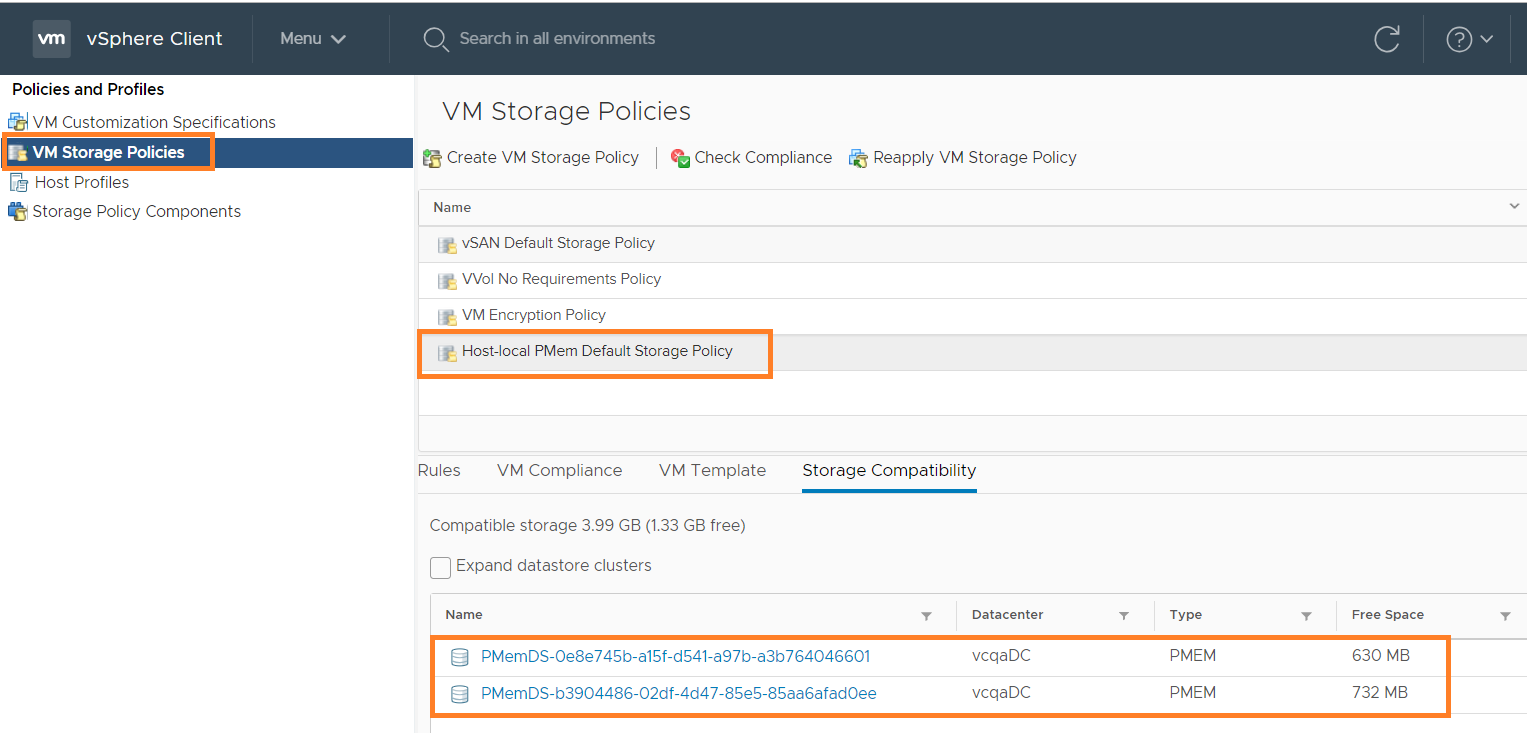

Below is what the PMem storage policy and the PMem local datastores look like.

Now that we have the required PMem-simulated hosts, let's start exploring how DRS works with PMem. As mentioned earlier, since I did not have any PMem-aware guest, I explored PMem with DRS in virtual disk mode (vPMemDisk), which works fine for legacy guest OSes. Below are my observations.

i) VM creation workflow (vSphere H5 client)

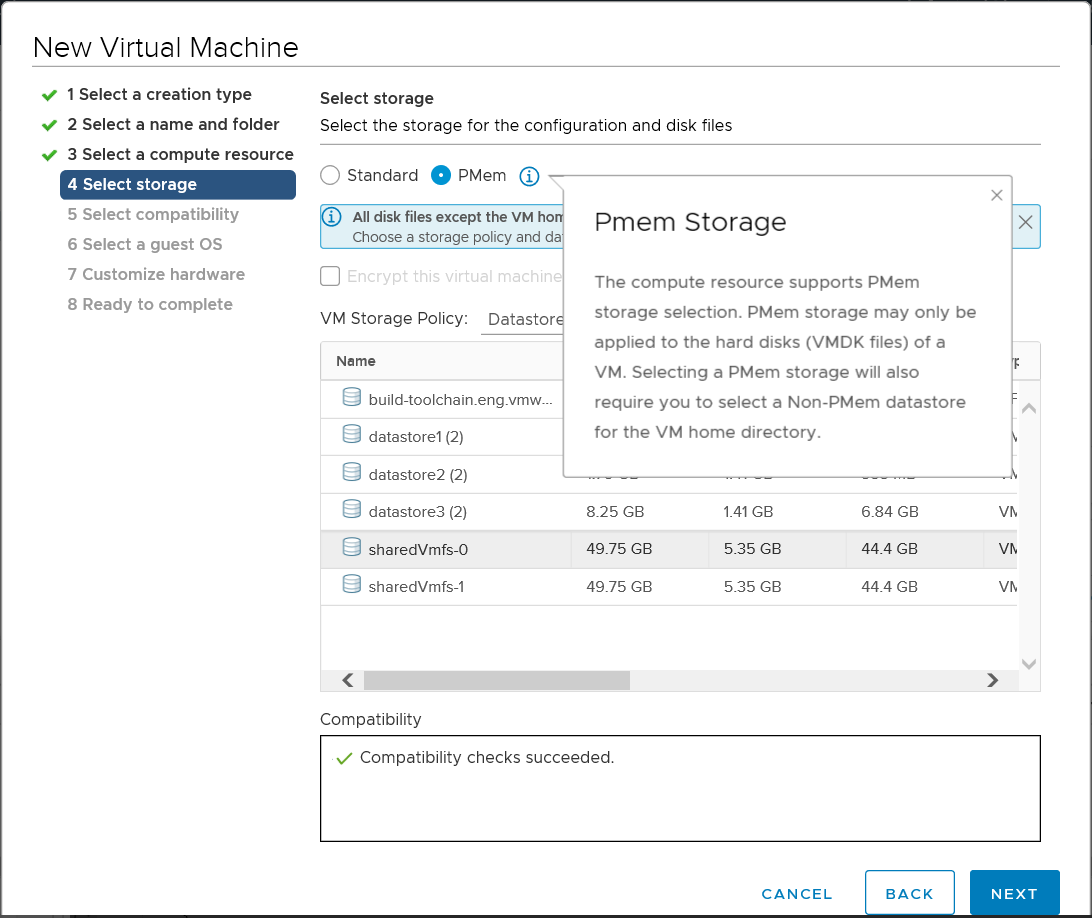

I created a VM with 512 MB of PMem on the DRS cluster. The screenshot below shows how PMem can be selected as storage.

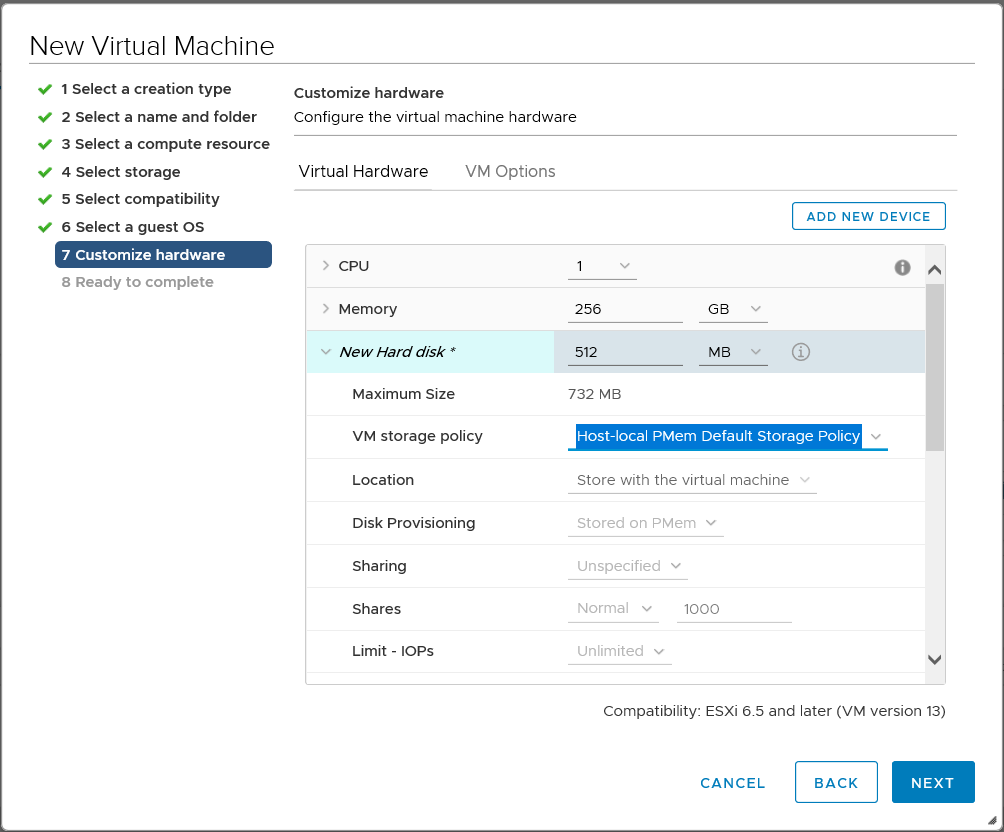

The screenshot below shows where the PMem storage policy comes into the picture. This policy is selected automatically once you select “PMem” as shown above.

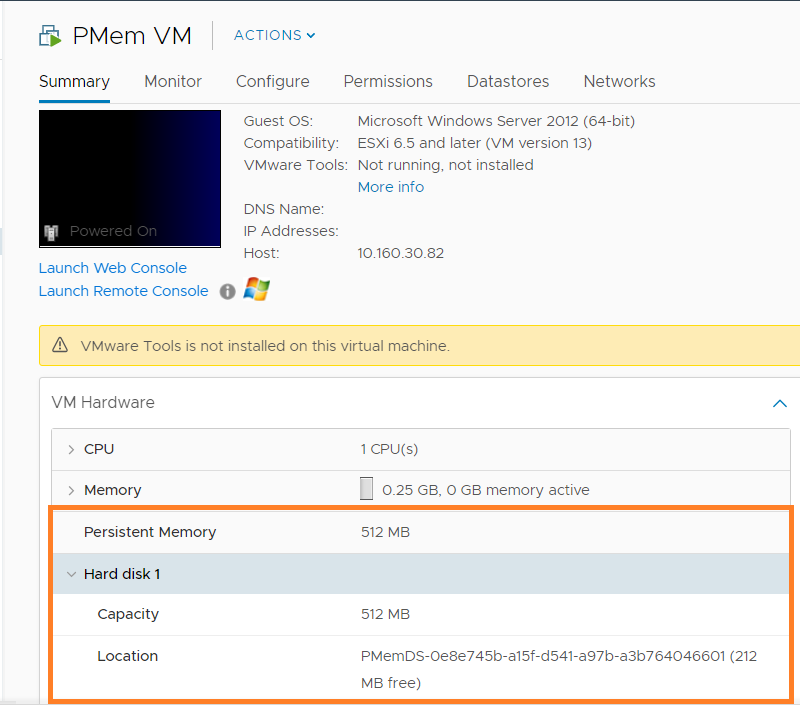

Now let's take a look at the VM summary.

ii) DRS initial placement

- The first VM I created got registered on one of the hosts with PMem storage, and I observed that when it was powered on, DRS placed it on the same host where it was already residing.

- Then I created two more PMem-configured VMs (with the same vmdk size) and powered them on. DRS placed the VMs on two separate hosts, as expected.
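To make the placement behavior concrete, here is a toy Python model of what I observed. It is not DRS's actual algorithm: the class and function names, the PMem sizes, and the "fewest VMs, then most free PMem" tie-break are all assumptions for illustration. A VM that is already registered on a PMem host stays there, and a new PMem VM is only placed on a host with enough free PMem.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VM:
    name: str
    pmem_mb: int = 0               # vPMemDisk size; 0 means no PMem
    home: Optional[str] = None     # host whose local PMem datastore holds the disk


@dataclass
class Host:
    name: str
    pmem_capacity_mb: int = 0      # 0 means the host has no PMem
    pmem_used_mb: int = 0
    vms: list[VM] = field(default_factory=list)

    def pmem_free_mb(self) -> int:
        return self.pmem_capacity_mb - self.pmem_used_mb


def register(vm: VM, host: Host) -> None:
    """Register a VM on a host; its PMem is reserved there from now on."""
    host.pmem_used_mb += vm.pmem_mb
    host.vms.append(vm)
    vm.home = host.name


def power_on_placement(vm: VM, hosts: list[Host]) -> Host:
    """Hypothetical toy model of the initial placement I observed, not DRS itself."""
    if vm.home is not None:
        # Already registered: its disk sits on that host's local PMem datastore.
        return next(h for h in hosts if h.name == vm.home)
    # Only hosts with enough free PMem are compatible (all hosts if pmem_mb == 0).
    candidates = [h for h in hosts if h.pmem_free_mb() >= vm.pmem_mb]
    if not candidates:
        raise RuntimeError(f"no compatible host for {vm.name}")
    # Assumed tie-break: fewest VMs first, then most free PMem.
    target = max(candidates, key=lambda h: (-len(h.vms), h.pmem_free_mb()))
    register(vm, target)
    return target
```

With two PMem hosts and one non-PMem host, a VM registered on the first host stays there on power-on, and two new 512 MB PMem VMs land on two different PMem hosts, never on the non-PMem one, which matches what I saw in the lab.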

iii) Host maintenance mode

- I had two hosts with PMem and one host without PMem. I put the PMem host on which one of the PMem-configured VMs was residing into maintenance mode. DRS migrated the VM to the other PMem host, as expected.

- Then I went ahead and tried to put the second PMem host into maintenance mode as well. Since no other host with PMem was available by then, the second host could not be put into maintenance mode.
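The maintenance mode outcome can be sketched the same way. This hypothetical model (not a VMware API, and not how DRS is implemented) evacuates a host only if every PMem VM on it fits on another PMem host that is not itself in maintenance mode; otherwise it refuses and moves nothing, as happened with the second host in my lab.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    pmem_mb: int = 0                 # vPMemDisk size; 0 means no PMem


@dataclass
class Host:
    name: str
    pmem_capacity_mb: int = 0        # 0 means the host has no PMem
    vms: list[VM] = field(default_factory=list)
    in_maintenance: bool = False

    def pmem_free_mb(self) -> int:
        # PMem is reserved for every registered VM, powered on or not.
        return self.pmem_capacity_mb - sum(vm.pmem_mb for vm in self.vms)


def enter_maintenance(host: Host, hosts: list[Host]) -> bool:
    """Hypothetical toy model: evacuate `host`, or refuse if a PMem VM has nowhere to go."""
    others = [h for h in hosts if h is not host and not h.in_maintenance]
    free = {h.name: h.pmem_free_mb() for h in others}
    plan = []
    for vm in host.vms:
        # A PMem VM needs enough free PMem; a non-PMem VM (pmem_mb == 0) fits anywhere.
        targets = [h for h in others if free[h.name] >= vm.pmem_mb]
        if not targets:
            return False             # nothing moved; host stays out of maintenance mode
        target = max(targets, key=lambda h: free[h.name])
        free[target.name] -= vm.pmem_mb
        plan.append((vm, target))
    # Every VM has a destination, so carry out the evacuation.
    for vm, target in plan:
        target.vms.append(vm)
    host.vms.clear()
    host.in_maintenance = True
    return True
```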

iv) DRS Affinity rules

- I experimented with both VM-VM and VM-Host rules and observed that DRS tries to satisfy the rules, but never migrates a PMem-configured VM to a non-PMem host, even if that means a rule gets violated.
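One way to picture this is to treat PMem compatibility as a hard filter and the affinity rule as a soft preference applied afterwards. The sketch below is an assumption based on my observations, not DRS's implementation; the names are hypothetical, and a VM-Host rule (or, for a VM-VM affinity rule, the host running the partner VM) is modeled simply as a set of preferred host names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    has_pmem: bool


def choose_host(needs_pmem: bool, hosts: list[Host],
                rule_hosts: set[str]) -> tuple[Host, bool]:
    """Hypothetical toy model: returns (chosen host, whether the rule is violated)."""
    # Hard constraint first: a PMem VM may only run on a PMem host.
    compatible = [h for h in hosts if h.has_pmem or not needs_pmem]
    if not compatible:
        raise RuntimeError("no compatible host")
    # Soft constraint second: prefer compatible hosts that satisfy the rule.
    preferred = [h for h in compatible if h.name in rule_hosts]
    if preferred:
        return preferred[0], False
    # No compatible host satisfies the rule: keep the VM compatible, violate the rule.
    return compatible[0], True
```

If a rule points a PMem VM at the non-PMem host, this model keeps the VM on a PMem host and reports the rule as violated, while a non-PMem VM simply follows the rule.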

v) DRS load balancing

I could not explore DRS load balancing with PMem VMs in much depth, but what I observed is that DRS prefers recommending migrations of non-PMem VMs over PMem VMs. Only when it is absolutely needed does DRS recommend migrating a PMem VM to another compatible host.
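This preference can be sketched as a two-tier choice: consider non-PMem migrations first, and fall back to a PMem VM migration (to a PMem-capable host only) when nothing else qualifies. The `benefit` score and `min_benefit` threshold are hypothetical stand-ins I made up for illustration; DRS's real cost-benefit analysis is considerably more involved.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    vm: str
    has_pmem: bool           # does the VM have a vPMemDisk?
    target_has_pmem: bool    # does the destination host have PMem?
    benefit: float           # hypothetical load-imbalance improvement score


def recommend(cands: list[Candidate], min_benefit: float) -> Optional[Candidate]:
    """Hypothetical toy model: prefer non-PMem moves, use PMem moves only as a fallback."""
    # Drop moves that are not worth it, and PMem VM moves to non-PMem hosts.
    valid = [c for c in cands
             if c.benefit >= min_benefit and (c.target_has_pmem or not c.has_pmem)]
    non_pmem = [c for c in valid if not c.has_pmem]
    # Fall back to PMem VM migrations only when no non-PMem move qualifies.
    pool = non_pmem or [c for c in valid if c.has_pmem]
    return max(pool, key=lambda c: c.benefit, default=None)
```

Note that in this model a lower-benefit non-PMem move still wins over a higher-benefit PMem move, which is one simple way to express "only if absolutely needed".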

Notes on PMem

- With vSphere 6.7, only one PMem local datastore is allowed per host.

- Normal datastore operations are not available on PMem datastores, and the vSphere H5 client does not list PMem datastores in the regular datastore views. The only operation available is monitoring stats.

- PMem datastore stats can be monitored using ESXCLI.

- The host-local PMem default storage policy cannot be edited. Also, the storage policy of a PMem VM cannot be changed in place; to change it, you need to either migrate or clone the VM.

- PMem is reserved regardless of whether the VM is powered on or not.

- Although PMem can be configured on standalone hosts, using DRS is highly recommended.

- DPM (Distributed Power Management) supports PMem as well.
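The reservation note has a practical consequence for capacity planning: powered-off PMem VMs still consume PMem datastore capacity. The toy accounting below illustrates this; the field names and sizes are hypothetical, and it only contrasts PMem reservation with the host DRAM that powered-on VMs actually use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    name: str
    mem_mb: int        # configured DRAM
    pmem_mb: int       # vPMemDisk size
    powered_on: bool


def host_usage(vms: list[VM]) -> dict[str, int]:
    """Hypothetical toy accounting for the VMs registered on one host."""
    return {
        # PMem counts for every registered VM, powered on or not.
        "pmem_reserved_mb": sum(vm.pmem_mb for vm in vms),
        # Host DRAM is only consumed by powered-on VMs.
        "dram_in_use_mb": sum(vm.mem_mb for vm in vms if vm.powered_on),
    }
```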

Official documentation can be found here & here

I hope you enjoyed this post. Please stay tuned for my next blog post on PMem, where I will discuss creating PMem VMs using the API.

Vikas Shitole is a Staff Engineer 2 at VMware (by Broadcom) India R&D. He currently contributes to core VMware products such as vSphere, vSphere with Tanzu and, in part, VCF and VMware Cloud on AWS. He is an AI and Kubernetes enthusiast. He is passionate about helping VMware customers and enjoys exploring automation opportunities around core VMware technologies. He has been a vExpert for 10 years in a row (2014-23) for his significant contributions to the VMware communities. He is the author of 2 VMware flings and holds multiple technology certifications. He is one of the lead contributors to VMware API Sample Exchange, with more than 35,000 downloads of his API scripts. He has spoken at international conferences such as VMworld Europe and VMworld USA, and was a designated VMworld 2018 blogger. He was the lead technical reviewer of two books, “vSphere Design” and “VMware Virtual SAN Essentials”, by Packt Publishing.

In addition, he is a passionate cricketer, enjoys bicycle riding and learning about fitness/nutrition, and aspires to one day complete an Ironman 70.3.